#pip install torch torchvision12 Deep Learning

12.1 Deep Learning (DL)

This section was prepared by Matthew Anzalone: final semester MsQE (Master of Science in Quantitative Economics) student. His research interests include community cohesion as predictors of economic outcomes, market concentration, and health economics.

12.1.1 Early DL History

Deep learning owes its existence to the original 1943 work of Warren McCulloch and Walter Pitts, who built the first artificial, electronic neural network. Although, it was not until the 1970s that deep learning got its start, following the work of Alexy Ivakhnenko and V. G. Lapa in 1967. 1979 saw the development of the first functional deep learning model in Kunihiko Fukushima’s “Neocognitron”.

12.1.2 Modern DL History

However, this was just the primordial roots of deep learning. It was not until 1989, when Yann LeChun, et al. implemented backpropagation into neural networks that the deep learning we are familiar with today got its start. There were still hurdles, though. The hardware of the 1990s was not up to speed with what was required to run deep learning models at anything resembling an acceptable speed. The modern advent and improvement of GPUs (graphical processing units), especially by Nvidia, allowed for the eventual explosion of machine learning and AI that we observe today. Such GPUs allow for running calculations simultaneously and in parallel, thus increasing speed and making calculations feasible.

12.1.3 Neural Networks vs. Deep Learning

How are deep learning models different from neural networks? Neural networks are not different from deep learning models, and are, in fact, the principle building block of DL models. The scope and depth of the neural networks are the only thing that separates neural networks from Deep learning models. DL models are defined (according to IBM) as the training of neural network models with at least four layers.

The advantage of DL models comes from the intermediate, hidden layers, where most of the delicate comparisons take place. These intermediate layers allow for a much more rigorous understanding of the input data, concentrating the essence of the interactions from the broader surface-layer nodes’ values to increasingly accurate outputs (depending on the number of intermediate layers).

Because deep learning is an extension of neural networks, training DL models also rely on backpropagation and gradient descent.

12.1.4 Types of Deep-learning Models

12.1.4.1 Convolutional Neural Networks

In essence, this method concentrates information down from a large number of nodes to a smaller sub-selection of nodes, that is, it creates convolution layers. These convolution layers are then used to preserve information on a larger scale, resulting in dimension reduction and allowing for efficient calculations from large, multi-factor datasets. One of the things this method is historically used for is image classification and recognition, as it allows one to break up images into many large parts, rather than looking at each pixel individually.

12.1.4.2 Recurrent Neural Networks

This method allows for sequential calculations from one node to the next. Therefore, it is useful for time-sequenced data or language recognition. For example, in a subject such as education economics, data might be years of education for individuals over time. Each additional year of education might depend on the GPA of the last year of education (IE: one will pursue a bachelors degree if they receive consistently high grades in an associates degree). Therefore, the model would need to understand each previous year in sequence to be able to predict the eventual final years of education for an individual.

12.1.4.3 Generative adversarial networks

These are used to generate new data, based on some example training data. The implementation is extremely interesting, in that the model pits two neural networks against one another until the testing network cannot accurately decide whether the data is artificially generated or part of the training set. This sounds amazing, but training such a model is, according to IBM, difficult and unstable. This method can be used with image generation and, arguably, seals our collective fate for eventually not being able to tell real pictures from AI-generated ones.

12.1.5 Current AI Surge and Transformer Models

The current surge in AI infrastructure and consumer AI models can be traced back to the 2017 Google paper “Attention is All You Need”. (Nearly all of the authors of the paper went on to found, co-found, or become CEO of highly successful AI companies). The paper outlines a new architecture for deep learning: the transformer model. The new methods are responsible for the existence of GPT (generative pre-trained transformer) models, such as ChatGPT. The advantage of the transformer model is its attention layers, which specify which nodes are closely related to other nodes. That is, the attention layer specifies which parts of the data interact with one another, meaning it can focus on certain interactions as more important to final output, increasing efficiency and accuracy. According to IBM, “…transformers don’t use recurrent layers; a standard transformer architecture uses only attention layers and standard feedforward layers, leveraging a novel structure inspired by the logic of relational databases.” That is, transformer models are more efficient than the recurrent neural networks which they succeeded.

12.1.6 Why Transformers?

Recurrent networks can only focus on one part of a sequence at a time, making them less scalable. However, transformers allow for a model to examine an entire sequence at once, and then focus on the most meaningful interactions for final output. This ability of transformers fits perfectly with the function of GPUs, as it allows for the simultaneous calculations of the transformer to run on the simultaneous hardware of the GPU.

The attention mechanism of transformers runs in a few successive steps:

+ reading data and converting into vector features,

+ determining interactions between vector features,

+ assigning interactions scores for feature combinations,

+ and finally assigning an attention weights for features.

This continues until the model arrives at accurate attention weights for final outputs, via backpropagation and gradient descent.

Queries and keys are used to compare a data sequence. The query is used as the starting point for retrieval of attention interactions. Keys represent the value of the token or “cell”. The output is then scaled by the attention weight, and the final output is passed on to the next node/layer, or the final output. (Tokens are how models like LLMs store information, such as words.)

This method can be extended to examples of image classification, which is used here to demonstrate the meaning of attention, query, and key.

12.1.7 Pytorch and implementation of transformer model

12.1.7.0.1 Installation of Pytorch

Go to the installation and startup documentation page and follow the installation steps, then run the code below, as outlined by the installation page.

The documentation states that a NVidia GPU is suggested for GPU processing, so this overview will use the CPU installation for accessibility. The GPU version can be installed by selecting one of the “CUDA” options on the installation page, then running the given pip-command in Python. This install command will run on both Mac and Windows (the Linux command is slightly different). When selecting the CPU option on Mac, the option reads “default”.

To verify whether torch installed correctly, the suggested test code from the installation page is run below, showing that the torch data-structure is functional.

import torch

x = torch.rand(5, 3)

print(x)tensor([[0.8598, 0.4388, 0.3275],

[0.9240, 0.3019, 0.8267],

[0.7712, 0.0991, 0.8446],

[0.2377, 0.6780, 0.7967],

[0.6226, 0.1598, 0.7692]])12.1.8 Implementation of Transformer Model

12.1.8.1 Data Importation

To highlight the uses and behaviors of the transformer model and attention weighting, we will use the same digit data used in the neural network implementation example. The model will aim to classify the given hand-drawn digits, and will focus on certain pixels in the 8x8 grid as more important in reaching a final output/conclusion as to the correct classification. This uses a simplified example generated from ChatGPT (fitting as the exact model demonstrated will ultimately create the code).

Below, the digit data is imported from the sklearn module, and is then split into training and testing subsets using the sklearn splitting package. Importantly, the train-test split is then passed to the torch datatype, which makes it usable in the transformer model. The “tensors” are simply another type of array which is used in AI architecture.

import torch

import torch.nn as nn

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from torch.utils.data import TensorDataset, DataLoader

import matplotlib.pyplot as plt

import random

random.seed(12345)

X, y = load_digits(return_X_y=True)

X = StandardScaler().fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

X_train = torch.tensor(X_train, dtype=torch.float32).view(-1, 64, 1)

X_test = torch.tensor(X_test, dtype=torch.float32).view(-1, 64, 1)

y_train = torch.tensor(y_train, dtype=torch.long)

y_test = torch.tensor(y_test, dtype=torch.long)

train_loader = DataLoader(

TensorDataset(X_train, y_train),

batch_size=64,

shuffle=True)

test_loader = DataLoader(TensorDataset(X_test, y_test), batch_size=128)This code builds the transformer model implementation for this example. It uses 4 heads, where each head represents one type of interaction between the given queries and keys. For example, one head could compare the shade of a key pixel to the query pixel, where another head might compare proximity. It is unspecified what type of interaction the model chooses for each of the heads. d_model describes the number of values in each token vector. num_layers represents the number of attention layers used in the model, where each attention layer has multiple linear layers and normalization layers, where each layer has at least 32 nodes (specified in d_model).

class TinyVisionTransformer(nn.Module):

def __init__(

self,

d_model=32,

nhead=4,

num_layers=2,

num_classes=10,

seq_len=64

):

super().__init__()

self.embedding = nn.Linear(1, d_model)

self.pos_encoding = nn.Parameter(

torch.zeros(1, seq_len, d_model)

)

encoder_layer = nn.TransformerEncoderLayer(

d_model=d_model,

nhead=nhead,

batch_first=True

)

self.transformer = nn.TransformerEncoder(

encoder_layer, num_layers=num_layers

)

self.classifier = nn.Linear(

d_model, num_classes

)

def forward(self, x):

x = self.embedding(x) + self.pos_encoding[:, :x.size(1), :]

x = self.transformer(x)

x = x.mean(dim=1)

return self.classifier(x)Now we train the model, prioritizing using the GPU “cuda” method, and the CPU method if GPU is not available. It will successively train over multiple runs, lowering the loss value each time, using entropy to compare the predicted values to actual values. lr=1e-3 is the learning rate of the model.

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = TinyVisionTransformer().to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

criterion = nn.CrossEntropyLoss()

for epoch in range(5):

model.train()

total_loss = 0

for xb, yb in train_loader:

xb, yb = xb.to(device), yb.to(device)

optimizer.zero_grad()

preds = model(xb)

loss = criterion(preds, yb)

loss.backward()

optimizer.step()

total_loss += loss.item()

print(f"Epoch {epoch+1}, loss={total_loss/len(train_loader):.4f}")Epoch 1, loss=2.3664

Epoch 2, loss=2.3017

Epoch 3, loss=2.2538

Epoch 4, loss=2.1687

Epoch 5, loss=2.0673Then, the test accuracy is calculated as a decimal representing the proportion of successful classifications to total classifications.

model.eval()

correct = 0

with torch.no_grad():

for xb, yb in test_loader:

preds = model(xb.to(device)).argmax(dim=1)

correct += (preds.cpu() == yb).sum().item()

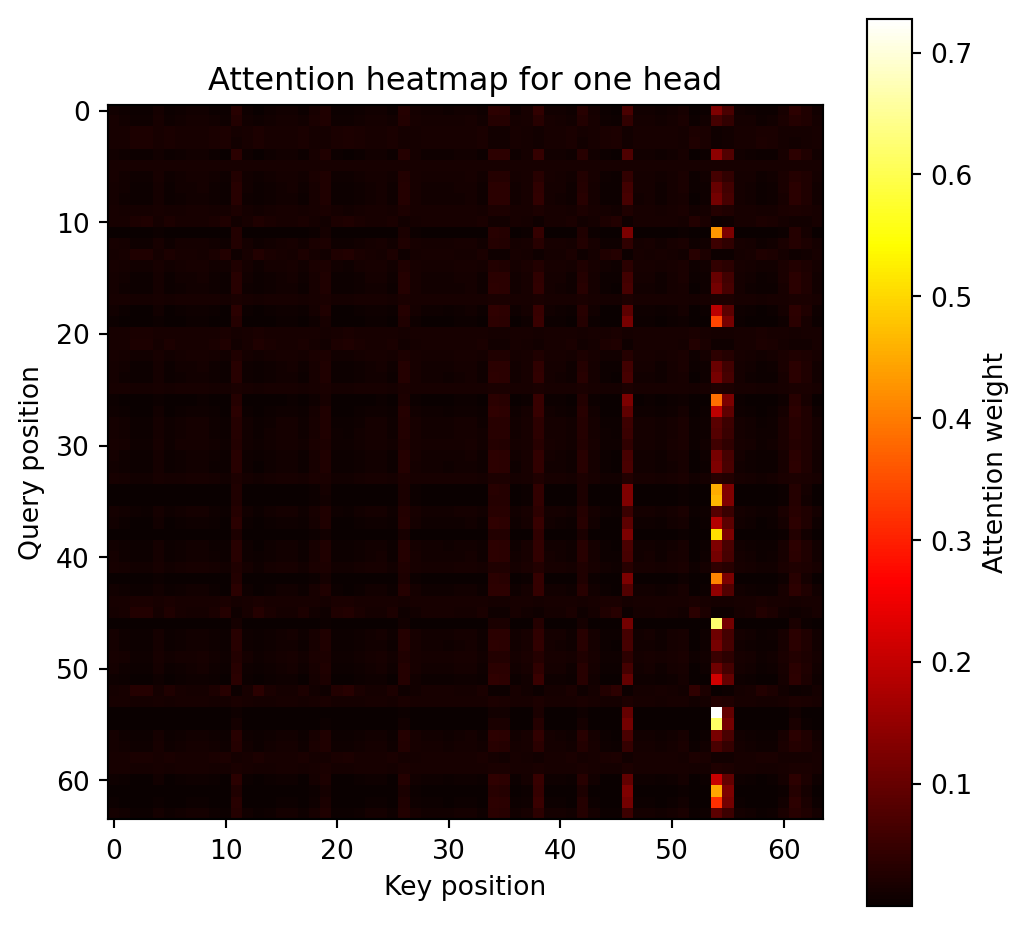

print(f"Test accuracy: {correct / len(y_test):.3f}")Test accuracy: 0.222Below is shown a figure of the attention scores between each of the 64 pixels (the query) to the same pixels (as a key). The figure represent a single “head” of the model. The tendency to show a vertical line represents the fact that certain pixels are important to classification no matter which pixel is queried.

encoder_layer = model.transformer.layers[0]

def get_attention_weights(layer, x):

with torch.no_grad():

attn_output, attn_weights = layer.self_attn(

x, x, x, need_weights=True, average_attn_weights=False

)

return attn_weights

sample_img = X_test[0:1].to(device) # (1, 64, 1)

embedded = model.embedding(sample_img) + model.pos_encoding[:, :64, :]

attn_weights = get_attention_weights(encoder_layer, embedded)

# First sample, first head

attn = attn_weights[0, 0].cpu().numpy() # shape: (64,64)

plt.figure(figsize=(6,6))

plt.imshow(attn, cmap='hot', interpolation='nearest')

plt.title("Attention heatmap for one head")

plt.xlabel("Key position")

plt.ylabel("Query position")

plt.colorbar(label="Attention weight")

plt.show()

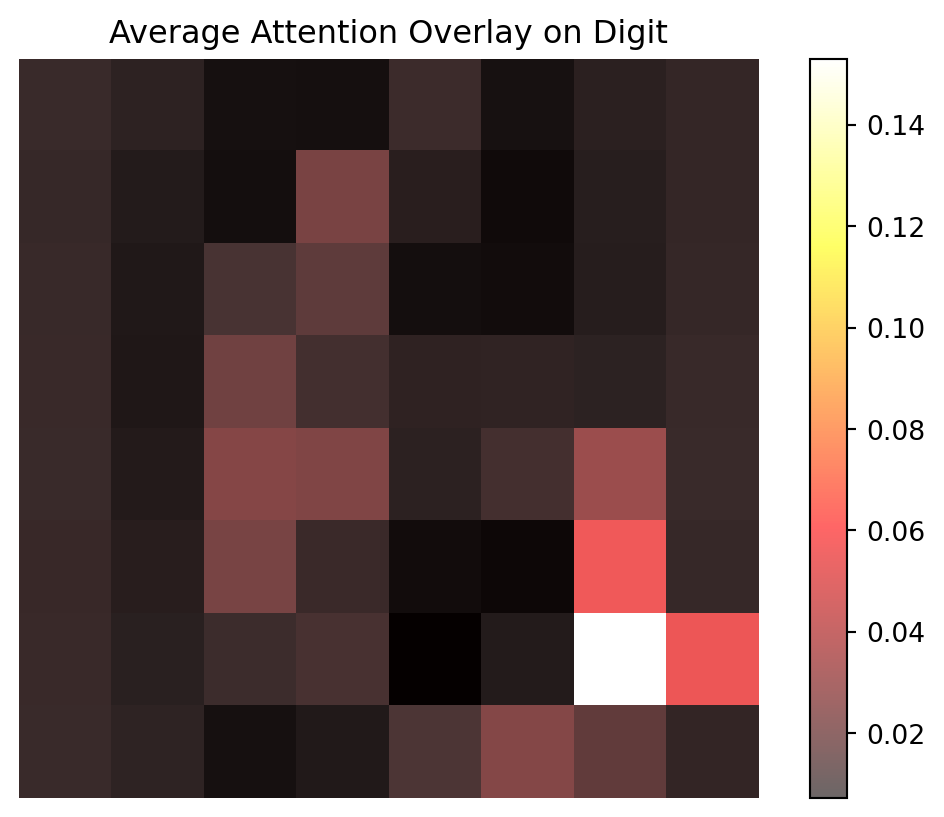

This graph overlays the heatmap from the previous graph onto an 8x8 graph like that of the digits. It averages out the attention scores for each query pixel which gives the overall importance of each pixel in determining which digit each image is classified as. The lighter pixels in this graph represent the vertical lines of high attention from the heatmap above.

attn_avg = attn.mean(axis=0) # average over query positions -> 64

attn_img = attn_avg.reshape(8,8)

plt.imshow(X_test[0].view(8,8).cpu().numpy(), cmap='gray')

plt.imshow(attn_img, cmap='hot', alpha=0.6)

plt.title("Average Attention Overlay on Digit")

plt.axis("off")

plt.colorbar()

plt.show()

12.1.9 Conclusion

Deep learning models are varied, and cutting edge, having only picked up speed in the past 20 years or so. Much of their surge in popularity and use can be attributed to the transformer-attention method, unveiled in 2017 by Google. This led to increased efficiency of DL models, far surpassing CNN and RNN models, allowing them to create the GPT models which are now seen in almost every aspect of industry and academics. The attention method assigns an attention weight to interactions between input queries and keys, and allows the model to efficiently focus on relevant data interactions. This can be applied to the image classification example above, extending the example already given in the neural network section.

12.1.10 Further Readings

12.1.10.1 History and Overview

History of Neural Networks and Deep Learning

Google paper (Attention is all You Need)

12.1.10.2 Implementation with Pytorch

Pytorch Package and Documentation

12.2 Neural Networks for Data with Temporal Dependence

Many real-world datasets are sequential, where earlier observations influence what happens later. Examples include electricity demand over hours, temperature across days, and stock prices through trading sessions. Such data exhibit temporal dependence, meaning that successive observations are not independent.

Traditional supervised learning models, such as linear regression and feedforward neural networks, treat each observation as if it were independent. When applied directly to time-ordered data, they fail to capture how information evolves through time. A prediction for one step does not reflect patterns that unfolded earlier.

To learn from sequential patterns, we need models that can remember what has already occurred and use that information to improve predictions. Neural networks designed for temporal dependence achieve this by introducing internal states that are updated as the sequence unfolds. The simplest such model is the recurrent neural network (RNN), which forms the basis for more advanced architectures such as long short-term memory (LSTM) and gated recurrent unit (GRU) networks.

12.2.1 Recurrent Neural Networks (RNNs)

To model data with temporal dependence, a neural network must be able to retain information about what has happened previously. A recurrent neural network (RNN) accomplishes this by maintaining an internal hidden state that evolves over time. The hidden state acts as a summary of all past inputs and is updated as new data arrive.

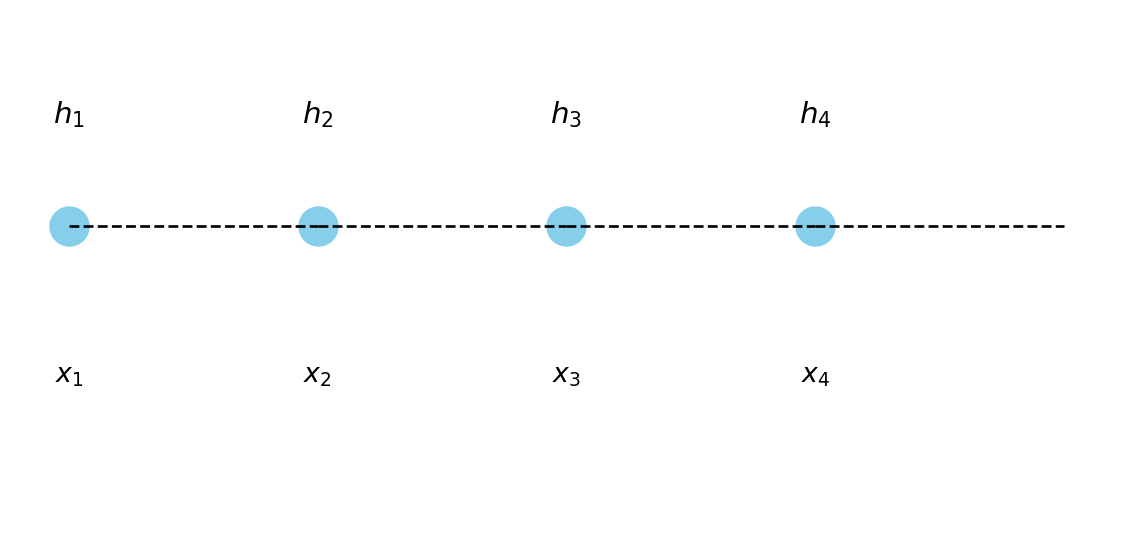

At each time step \(t\), an RNN receives an input vector \(x_t\) and produces a hidden state \(h_t\) according to

\[ h_t = \tanh(W_h h_{t-1} + W_x x_t + b_h), \]

where \(W_h\) and \(W_x\) are weight matrices and \(b_h\) is a bias term. The output at the same step can be expressed as

\[ \hat{y}_t = \sigma(W_y h_t + b_y), \]

with \(\sigma(\cdot)\) representing an activation or link function. Because \(h_t\) depends on \(h_{t-1}\), the network can in principle capture relationships across time.

The initial hidden state \(h_0\) must be specified before the sequence starts. In most applications, \(h_0\) is set to a vector of zeros with the same dimension as \(h_t\), allowing the network to begin without prior memory. This default works well because the recurrent updates quickly overwrite the initial state as new inputs arrive. In some advanced or stateful applications, \(h_0\) can instead be learned during training or carried over from the final state of a previous sequence, enabling the model to preserve continuity across batches.

Before training can begin, an objective function must be defined to measure how well the network predicts the target sequence. For a series of observations \(\{(x_t, y_t)\}_{t=1}^T\), the total loss is typically the sum of stepwise prediction errors, \[ \mathcal{L} = \sum_{t=1}^T \ell(y_t, \hat{y}_t), \] where \(\ell\) is a suitable loss such as mean squared error for regression or cross-entropy for classification. The gradients of \(\mathcal{L}\) with respect to the network parameters are then computed and used to update the weights through backpropagation through time.

Figure 12.1 illustrates how an RNN can be unrolled across time steps, showing that the same set of weights is reused at each step. The hidden state serves as a bridge between past and present inputs, allowing the network to accumulate information through time.

Training an RNN is done by backpropagation through time (BPTT), which unrolls the network over all time steps and applies gradient descent. However, when sequences are long, the repeated multiplication of gradients can lead to vanishing or exploding gradients. This makes it difficult for a standard RNN to learn long-term dependencies, limiting its ability to remember events far in the past.

In many applications, temporal dependence is only one part of the problem. Alongside the time-varying input \(x_t\), there may be additional covariates \(z\) that describe static or slowly changing characteristics, such as a station ID, region, or weather condition. These can be incorporated into an RNN by concatenating them with \(x_t\) at each time step or by feeding them into separate layers whose outputs are combined with the recurrent representation. In practice, the design depends on whether such covariates are constant across time or vary together with the sequence.

To address the limitations of standard RNNs, researchers developed architectures that explicitly control how information is remembered or forgotten. The most influential of these is the LSTM network, which introduces a structured memory cell and gating mechanisms to stabilize learning over longer sequences.

12.2.2 Long Short-Term Memory (LSTM)

The main limitation of a standard RNN is its inability to retain information over long sequences. During backpropagation through time, gradients tend to either vanish or explode, preventing effective learning of long-term dependencies. The Long Short-Term Memory (LSTM) network, proposed by Hochreiter and Schmidhuber (1997), was designed to overcome this problem.

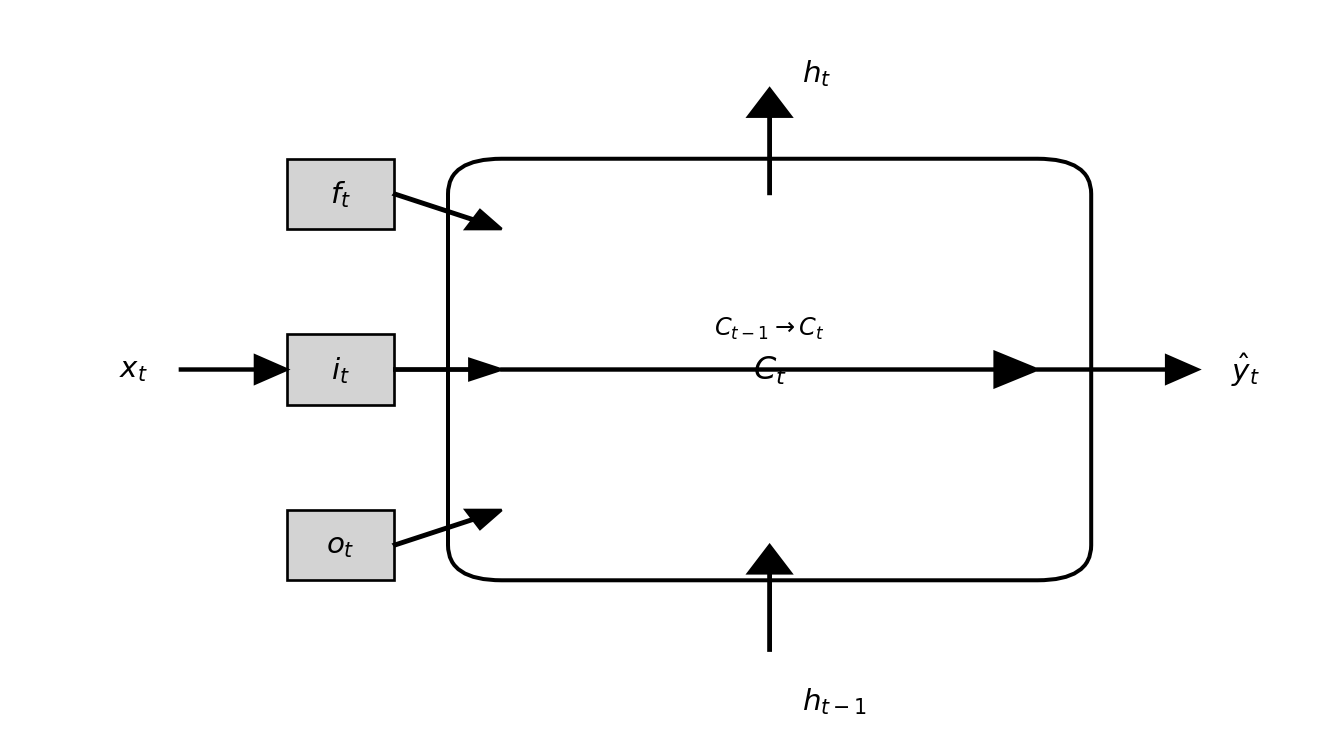

An LSTM introduces a separate cell state \(C_t\) that acts as a highway for information to flow across time steps, along with gating mechanisms that regulate what to remember and what to forget. The gates use sigmoid activations to produce values between 0 and 1, allowing the network to scale information rather than overwrite it.

The key update equations of an LSTM are

\[ \begin{aligned} f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f), \\ i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i), \\ \tilde{C}_t &= \tanh(W_C [h_{t-1}, x_t] + b_C), \\ C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t, \\ o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o), \\ h_t &= o_t \odot \tanh(C_t), \end{aligned} \]

where \(\odot\) denotes element-wise (Hadamard) multiplication and \(\sigma(\cdot)\) is the logistic sigmoid function. Each gate \(f_t\), \(i_t\), and \(o_t\) outputs values between 0 and 1 that determine how information flows through the cell.

The activation functions \(\tanh(\cdot)\) and \(\sigma(\cdot)\) play specific roles in the LSTM design. The sigmoid \(\sigma\) compresses values to the range \((0,1)\), making it suitable for gate control because it behaves like a smooth on–off switch. The hyperbolic tangent \(\tanh\) maps inputs to \((-1,1)\), allowing both positive and negative contributions to the cell state.

Other activation functions can in principle replace \(\tanh\), such as ReLU or Leaky ReLU, but this is uncommon in practice. ReLU may cause the cell state to grow without bound, and smooth symmetric activations like \(\tanh\) are generally more stable for recurrent updates. Some modern variants, such as the Peephole LSTM and GRU, adjust or simplify these activations, but the original combination of \(\sigma\) and \(\tanh\) remains the standard choice.

Each of the three gates in an LSTM serves a distinct role.

The forget gate \(f_t\) determines how much of the previous cell state \(C_{t-1}\) should be retained, effectively deciding what information to discard. The input gate \(i_t\) controls how much new information \(\tilde{C}_t\) enters the cell state, allowing the network to incorporate relevant updates. The output gate \(o_t\) regulates how much of the cell state is exposed as the hidden state \(h_t\), influencing the network’s prediction at the current step. Together, these gates maintain a balance between remembering long-term patterns and adapting to new signals. Figure Figure 12.2 illustrates how the three gates interact with the cell state and hidden states to manage information flow through time.

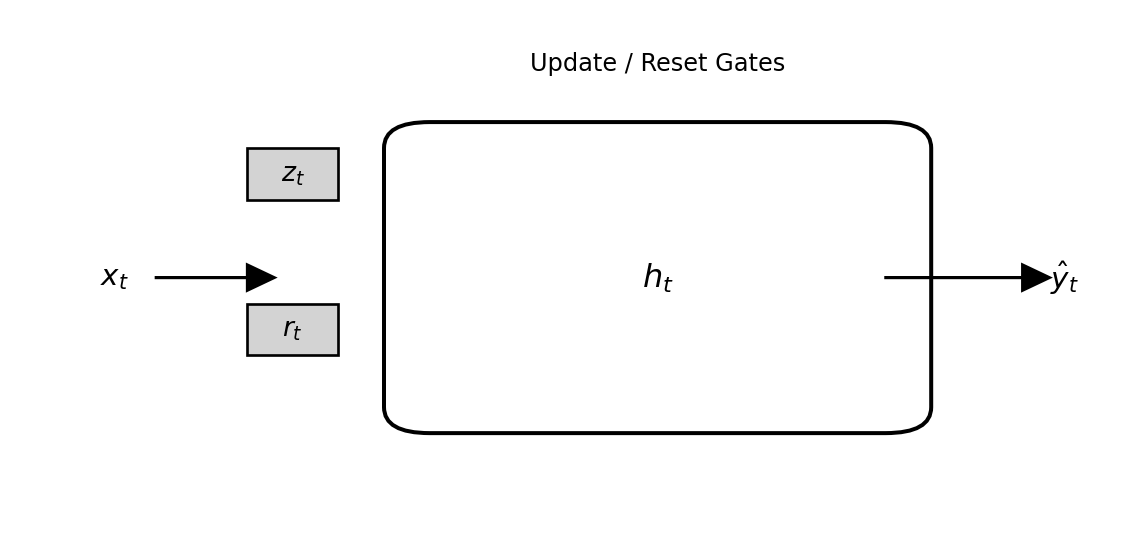

12.2.3 Gated Recurrent Unit (GRU)

The Gated Recurrent Unit (GRU), introduced by Cho et al. (2014), is a simplified variant of the LSTM that retains its ability to capture long-term dependencies while using fewer parameters. The GRU combines the roles of the input and forget gates into a single update gate and omits the separate cell state \(C_t\), relying only on the hidden state \(h_t\) to store information.

The GRU update equations are

\[ \begin{aligned} z_t &= \sigma(W_z [h_{t-1}, x_t] + b_z), \\ r_t &= \sigma(W_r [h_{t-1}, x_t] + b_r), \\ \tilde{h}_t &= \tanh(W_h [r_t \odot h_{t-1}, x_t] + b_h), \\ h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t, \end{aligned} \]

where \(z_t\) is the update gate and \(r_t\) is the reset gate. The update gate controls how much of the previous hidden state to keep, while the reset gate determines how strongly past information should influence the new candidate state \(\tilde{h}_t\).

The structure of a GRU cell is illustrated in Figure 12.3. Compared with an LSTM, the GRU is computationally simpler because it has no separate cell state and fewer matrix operations. Despite this simplification, GRUs often perform as well as LSTMs, especially when datasets are smaller or sequence lengths are moderate.

12.2.4 Example: Forecasting a Synthetic Sequential Signal (PyTorch)

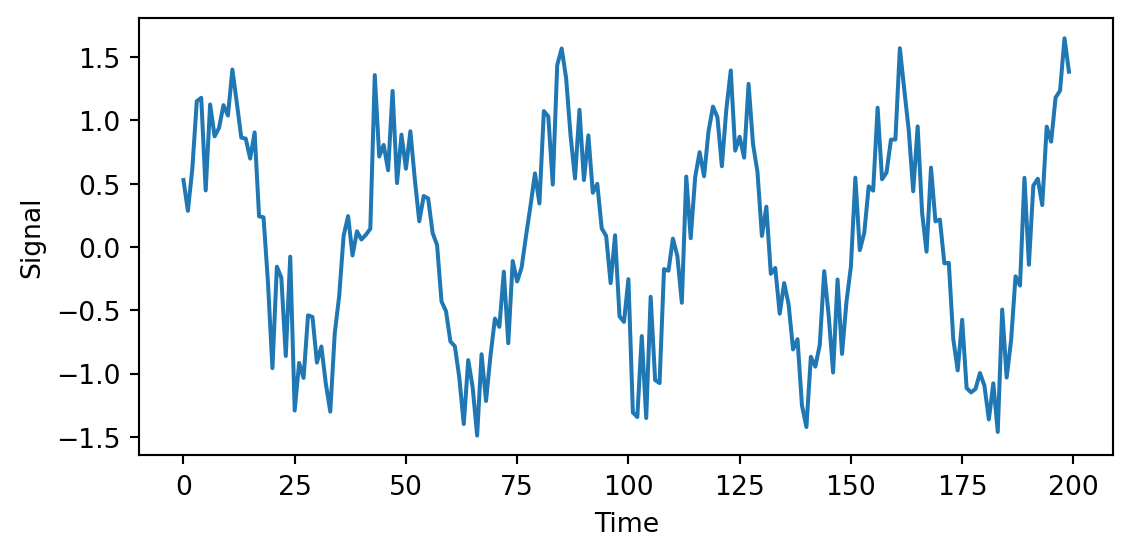

To compare recurrent architectures in a reproducible way, we use a synthetic sine-wave signal with random noise. This allows us to train RNN, LSTM, and GRU models side-by-side without large datasets or external dependencies.

12.2.4.1 Step 1. Generate the data

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

np.random.seed(0)

time = np.arange(0, 200)

signal = np.sin(time / 6) + 0.3 * np.random.randn(200)

df = pd.DataFrame({"time": time, "signal": signal})

plt.figure(figsize=(6, 3))

plt.plot(df["time"], df["signal"])

plt.xlabel("Time")

plt.ylabel("Signal")

plt.tight_layout()

plt.show()

12.2.4.2 Step 2. Prepare input sequences

Each training example uses the previous 20 observations to predict the next value.

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import train_test_split

import torch

from torch import nn

L = 20

scaler = MinMaxScaler()

scaled = scaler.fit_transform(df[["signal"]])

X, y = [], []

for t in range(L, len(scaled)):

X.append(scaled[t-L:t, 0])

y.append(scaled[t, 0])

X, y = np.array(X), np.array(y)

X = X.reshape(X.shape[0], L, 1)

# split and convert to tensors

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, shuffle=False)

X_train = torch.tensor(X_train, dtype=torch.float32)

y_train = torch.tensor(y_train, dtype=torch.float32).view(-1, 1)

X_test = torch.tensor(X_test, dtype=torch.float32)

y_test = torch.tensor(y_test, dtype=torch.float32).view(-1, 1)12.2.4.3 Step 3. Define recurrent models

class RecurrentModel(nn.Module):

def __init__(self, rnn_type="RNN", hidden_size=50):

super().__init__()

if rnn_type == "LSTM":

self.rnn = nn.LSTM(1, hidden_size, batch_first=True)

elif rnn_type == "GRU":

self.rnn = nn.GRU(1, hidden_size, batch_first=True)

else:

self.rnn = nn.RNN(1, hidden_size, batch_first=True)

self.fc = nn.Linear(hidden_size, 1)

def forward(self, x):

out, _ = self.rnn(x)

return self.fc(out[:, -1, :])12.2.4.4 Step 4. Train and evaluate

def train_model(model, X, y, epochs=50, lr=0.01):

criterion = nn.MSELoss()

optimizer = torch.optim.Adam(model.parameters(), lr=lr)

for _ in range(epochs):

optimizer.zero_grad()

loss = criterion(model(X), y)

loss.backward()

optimizer.step()

return loss.item()

def predict(model, X):

model.eval()

with torch.no_grad():

return model(X).numpy()

models = {

"RNN": RecurrentModel("RNN"),

"LSTM": RecurrentModel("LSTM"),

"GRU": RecurrentModel("GRU")

}

for name, m in models.items():

final_loss = train_model(m, X_train, y_train)

print(f"{name} final training loss: {final_loss:.5f}")

y_preds = {name: predict(m, X_test) for name, m in models.items()}RNN final training loss: 0.01512

LSTM final training loss: 0.02947

GRU final training loss: 0.0139812.2.4.5 Step 5. Compare RMSE and MAE

from sklearn.metrics import mean_squared_error, mean_absolute_error

def metrics(y_true, y_pred):

rmse = np.sqrt(mean_squared_error(y_true, y_pred))

mae = mean_absolute_error(y_true, y_pred)

return rmse, mae

for name, y_hat in y_preds.items():

rmse, mae = metrics(y_test, y_hat)

print(f"{name:5s} – RMSE: {rmse:.4f}, MAE: {mae:.4f}")RNN – RMSE: 0.1165, MAE: 0.0984

LSTM – RMSE: 0.1844, MAE: 0.1580

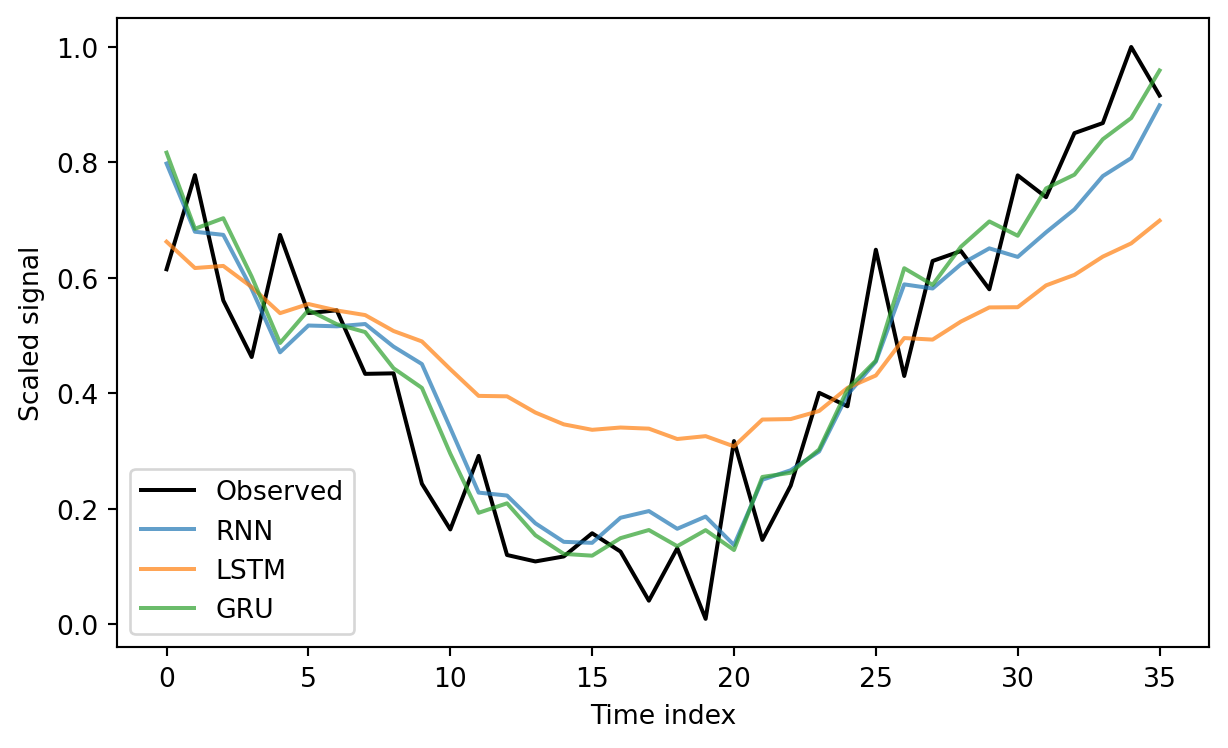

GRU – RMSE: 0.1075, MAE: 0.087012.2.4.6 Step 6. Visual comparison

plt.figure(figsize=(6.5, 4))

plt.plot(y_test[:100], label="Observed", color="black")

for name, y_hat in y_preds.items():

plt.plot(y_hat[:100], label=name, alpha=0.7)

plt.xlabel("Time index")

plt.ylabel("Scaled signal")

plt.legend()

plt.tight_layout()

plt.show()

12.2.4.7 Discussion

All three networks capture the oscillatory pattern, but the vanilla RNN has difficulty preserving phase alignment when the sequence is long. Both the LSTM and GRU learn the dependency structure more reliably. The GRU reaches nearly the same accuracy as the LSTM while training faster, thanks to its simpler gating design.

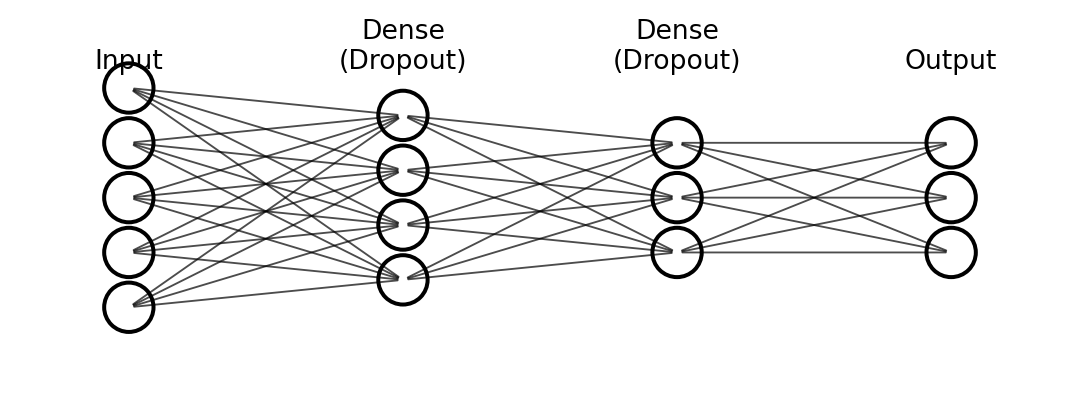

12.3 Convolutional Neural Network (CNN)

This section was prepared by Michael Agostino.

12.3.1 Introduction

We will use two different neural networks to classify handwritten digits.

The first network is an MLP:

A Multi-Layer Perceptron (MLP) is a feed-forward artificial neural network composed of multiple layers of interconnected neurons.

Next, we will extend these concepts to build a CNN:

A Convolutional Neural Network (CNN) is also a feed-forward network. They use convolutional layers that make image classification tasks more efficient and natural.

Implementation and Data:

Both networks will be implemented using Keras, which runs on top of TensorFlow.

We will train and evaluate both models on the MNIST database (Modified National Institute of Standards and Technology database), which provides 70,000 grayscale images of handwritten digits. Each image is 28 × 28 pixels.

Image Representation:

Each pixel is represented by a single integer value:

0 represents pure black

255 represents pure white

Values in between (e.g., 128) represent intermediate shades of gray

Thus, each image is encoded as a set of 28 × 28 = 784 numbers.

12.3.2 Data Preparation

import os, multiprocessing, numpy as np, tensorflow as tf

from tensorflow import keras

multiprocessing.set_start_method("spawn", force=True)

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

np.random.seed(42)

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

x_train = x_train.astype("float32") / 255.0

x_test = x_test.astype("float32") / 255.0

print(f"x_train: {x_train.shape}, x_test: {x_test.shape}")2025-12-13 18:24:33.302488: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.x_train: (60000, 28, 28), x_test: (10000, 28, 28)Each pixel value is scaled to the range 0–1.

Data is pre-split:

x_train, y_train- 60 000 images.x_test, y_test- 10 000 images.

12.3.3 MLP structure

# define MLP

from tensorflow.keras import layers

model = keras.Sequential([

layers.Dense(256, activation="relu", input_shape=(784,)),

layers.Dropout(0.3),

layers.Dense(128, activation="relu"),

layers.Dropout(0.3),

layers.Dense(10, activation="softmax")

])

model.compile(optimizer="adam",

loss="sparse_categorical_crossentropy",

metrics=["accuracy"])

model.summary()/Users/junyan/work/teaching/ids-f25/ids-f25/.ids-f25-py3.12/lib/python3.12/site-packages/keras/src/layers/core/dense.py:95: UserWarning:

Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.

Model: "sequential"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Output Shape ┃ Param # ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩ │ dense (Dense) │ (None, 256) │ 200,960 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dropout (Dropout) │ (None, 256) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense_1 (Dense) │ (None, 128) │ 32,896 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dropout_1 (Dropout) │ (None, 128) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense_2 (Dense) │ (None, 10) │ 1,290 │ └─────────────────────────────────┴────────────────────────┴───────────────┘

Total params: 235,146 (918.54 KB)

Trainable params: 235,146 (918.54 KB)

Non-trainable params: 0 (0.00 B)

A dense (fully-connected) layer in Keras means every neuron receives input from every neuron in the previous layer.

The first dense layer has 256 neurons; input_shape=(784,) tells Keras each image supplies 784 values.

For every neuron \(j\) \((j = 1 … 256)\) we compute the weighted sum.

\(z_j = ( \sum_{i=1}^{784} x_i W_{i,j} ) + b_j\)

Where:

\(x_i\) is the normalised pixel value,

\(W_{i,j}\) is the (initially random) weight connecting pixel \(i\) to neuron \(j\),

\(b_j\) is the bias of neuron \(j\).

After the weighted sum we apply the ReLU activation

\({output}_j = relu(z_j) = max(0, z_j)\)

so the layer returns a vector of 256 non-negative numbers, introducing non-linearity rather than normalising them to [0, 1].

A second dense hidden layer (also 256 units) follows, and then the output layer.

The output layer contains 10 neurons—one per digit class—followed by the softmax activation. Softmax turns the 10 raw scores into probabilities:

Each probability lies between 0 and 1.

The probabilities sum to 1 across the 10 classes.

Thus the final vector gives an easily interpretable confidence value for each digit 0–9.

12.3.4 MLP Training

import os, keras

mlp_path = "./.keras/mlp_mnist_model.keras"

if os.path.exists(mlp_path):

mlp = keras.models.load_model(mlp_path)

hist = None

else:

x_flat = x_train.reshape(x_train.shape[0], -1)

hist = model.fit(x_flat, y_train,

epochs=10, batch_size=32,

validation_split=0.1, verbose=0)

model.save(mlp_path)

mlp = modelNote: In this network, each image, for both training and prediction is treaded as one-dimensional array of grayscale pixes.

This is a supervised learning technique, meaning we already have each handwritten digit correctly labeled as a number. We need a loss funciton, For this application, we use sparse_categorical_crossentropy, which has the formula for a single sample:

\(L = -\log(p_y)\)

This loss function measures how different our predicted result is from the expected result – essentially, the error.

A higher loss means our model is further from the correct answer.

A lower loss means our model is closer to the correct answer.

Note: Since we use softmax as our output layer activation function, the model outputs a probability distribution. The sparse_categorical_crossentropy function is designed to work with integer labels (like 2) – it internally converts the true label to a one-hot format, then computes the cross-entropy loss. This is more memory-efficient than storing one-hot vectors for large datasets.

For example, if the expected digit is 2 and our untrained model outputs:

\[ \begin{pmatrix} \text{Digit } 0 = 0.2 \\ \text{Digit } 1 = 0.1 \\ \text{Digit } 2 = 0.3 \\ \text{Digit } 3 = 0.05 \\ ... \end{pmatrix} \]

Expected value:

\[ \begin{pmatrix} \text{Digit } 0 = 0 \\ \text{Digit } 1 = 0 \\ \text{Digit } 2 = 1 \\ \text{Digit } 3 = 0 \\ ... \end{pmatrix} \]

The loss for a single image would be calculated using only the probability assigned to the true class (digit 2): \(L = -log(0.3) = 0.5229\). If the model were more confident and output 0.9 for digit 2, the loss would be much lower: \(L = -log(0.9) = 0.0458\).

During training, this loss is computed for each of the 32 images in a batch, then averaged to produce the cost. This single number tells us how poorly the model is performing overall.

The cost is optimized throught the process of backpropagation, the optimizer used in this example is ‘Adam’.

batch_size=32 and epochs=10 tell Keras how to slice and repeat the 90 %-split training subset:

Batch (mini-batch)

Every 32 samples the gradients are computed and the weights are updated once.

If the training subset has N examples, one epoch contains N/32 such weight updates.

Epoch

The complete data set is gone through once.

The whole process repeats 10 times, each time starting with the data already shuffled by Keras.

So, across the 10 epochs the network will see every training point 10 times, and the weights will be updated roughly \(10 \cdot (N / 32)\) times in total.

12.3.5 Evaluating the MLP Model

# evaluate & plot MLP history

import matplotlib.pyplot as plt

x_flat_test = x_test.reshape(x_test.shape[0], -1)

test_loss, test_acc = mlp.evaluate(x_flat_test, y_test, verbose=0)

print(f"MLP Test accuracy: {test_acc:.4f}")

if hist:

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

ax1.plot(hist.history['accuracy'], label='train')

ax1.plot(hist.history['val_accuracy'], label='val')

ax1.set_title("MLP accuracy"); ax1.legend()

ax2.plot(hist.history['loss'], label='train')

ax2.plot(hist.history['val_loss'], label='val')

ax2.set_title("MLP loss"); ax2.legend()

plt.show()MLP Test accuracy: 0.9828Note This graph will only render if you have trained the model yourself.

accuracy / loss: how well the model learns the 90 % subset it is trained on.

val_accuracy / val_loss: how well the model generalises to unseen data during training.

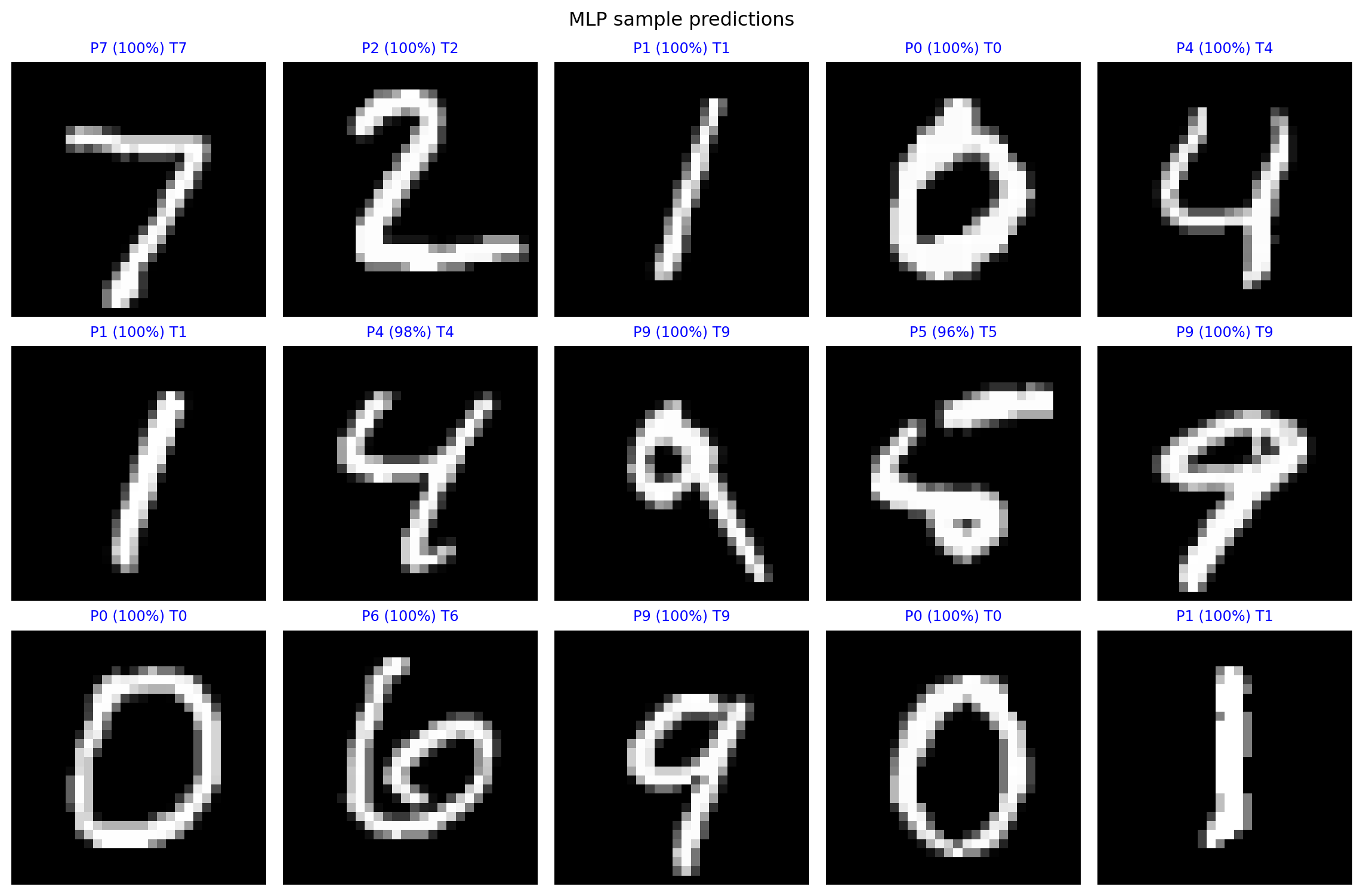

# MLP predictions grid

preds = mlp.predict(x_flat_test, verbose=False)

fig, axes = plt.subplots(3, 5, figsize=(12, 8))

axes = axes.ravel()

for i in range(15):

ax = axes[i]

ax.imshow(x_test[i], cmap='gray')

p, t = preds[i].argmax(), y_test[i]

c = 'blue' if p == t else 'red'

conf = preds[i].max() * 100

ax.set_title(f"P{p} ({conf:.0f}%) T{t}", color=c, fontsize=9)

ax.axis('off')

plt.suptitle("MLP sample predictions"); plt.tight_layout(); plt.show()

The model is strong enough to classify images within this very simple dataset, with a large amount of training data.

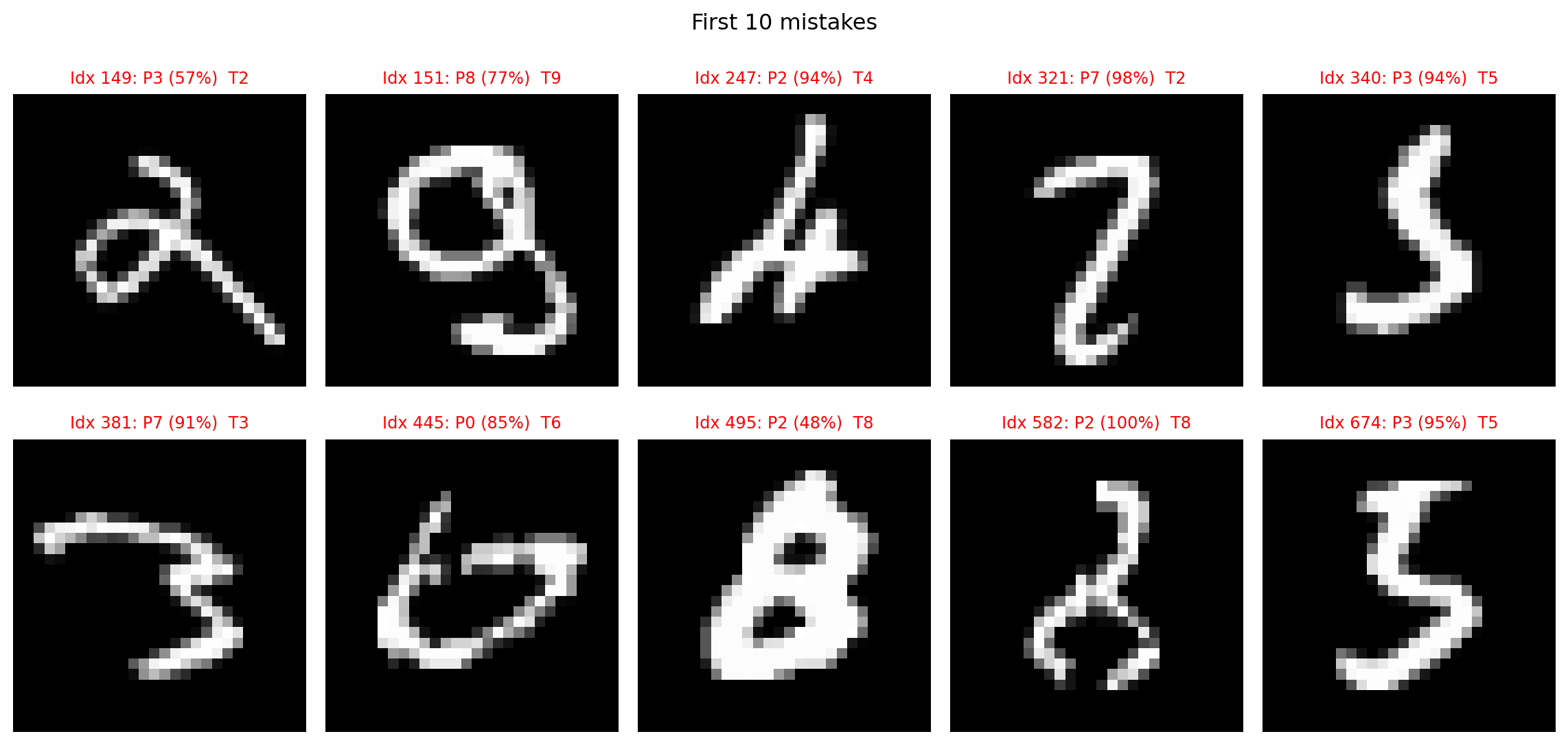

wrong_idx = np.where(np.argmax(preds, axis=1) != y_test)[0]

print(f"Total wrong: {len(wrong_idx)} / {len(y_test)}")

N = 10

fig, axes = plt.subplots(2, 5, figsize=(12, 6)); axes = axes.ravel()

for k, idx in enumerate(wrong_idx[:N]):

ax = axes[k]

ax.imshow(x_test[idx], cmap='gray')

p = preds[idx].argmax(); t = y_test[idx]

conf = preds[idx].max()*100

ax.set_title(f"Idx {idx}: P{p} ({conf:.0f}%) T{t}", color='red', fontsize=9)

ax.axis('off')

plt.suptitle("First 10 mistakes"); plt.tight_layout(); plt.show()Total wrong: 172 / 10000

Many of these incorrect predictions are human readable, for the most part.

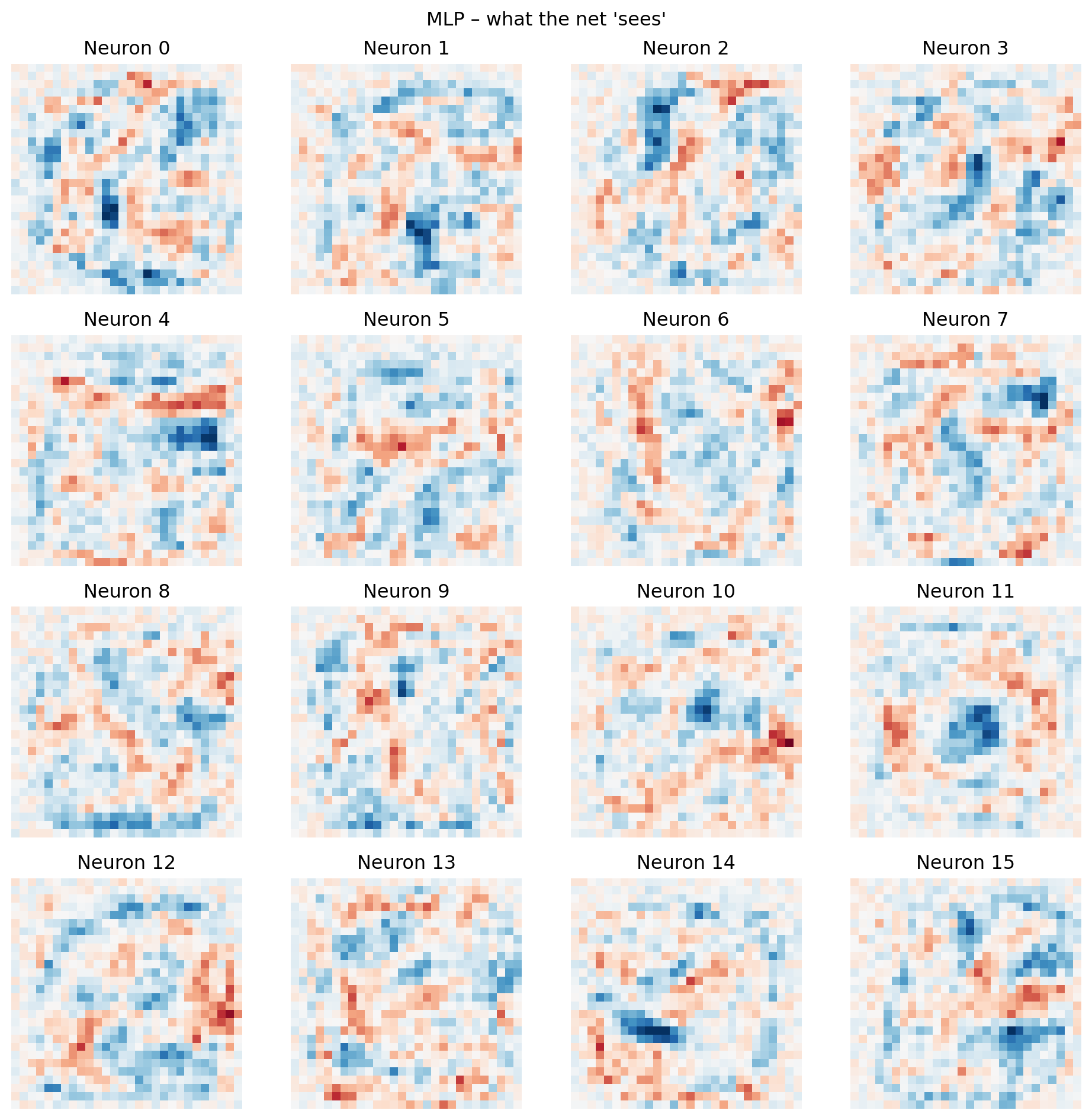

12.3.6 MLP Weight visualization

Each of the 256 neurons has 784 weights (one per input pixel) We can reshape each neuron’s weight vector back into a 28x28 image.

We are essentially placing each weight back into the spatial location of the input pixel it originated from.

So, the value (and color) at \((x, y)\) in the visualization corresponds to the weight connecting the pixel at \((x, y)\) in the original image to this specific hidden neuron.

Red means this weight contributes negatively to the activation function.

Blue means that the weight contributes positively to the activation function.

# MLP first-layer weights

w, _ = mlp.layers[0].get_weights() # shape (784, 256)

plt.figure(figsize=(10, 10))

for i in range(16):

plt.subplot(4, 4, i + 1)

plt.imshow(w[:, i].reshape(28, 28), cmap='RdBu_r', vmin=-.5, vmax=.5)

plt.title(f'Neuron {i}'); plt.axis('off')

plt.suptitle("MLP – what the net 'sees'"); plt.tight_layout(); plt.show()

MLP does a good job of classifying images in this simple dataset. These images are all centered, simple, 28x28 and there are only 10 different possibilities.

However, when we visualize the weights of the hidden neurons, they often appear random or noisy, not like recognizable visual features (edges, shapes, etc.).

This suggests the model doesn’t “see” in a human-like way. Instead of understanding visual structure, the MLP learns complex, non-linear statistical correlations between individual pixels and classes. It effectively separates the data in a high-dimensional space, even if its internal representation isn’t directly interpretable to us. It’s found a statistically sufficient solution, rather than building an intuitive visual model.

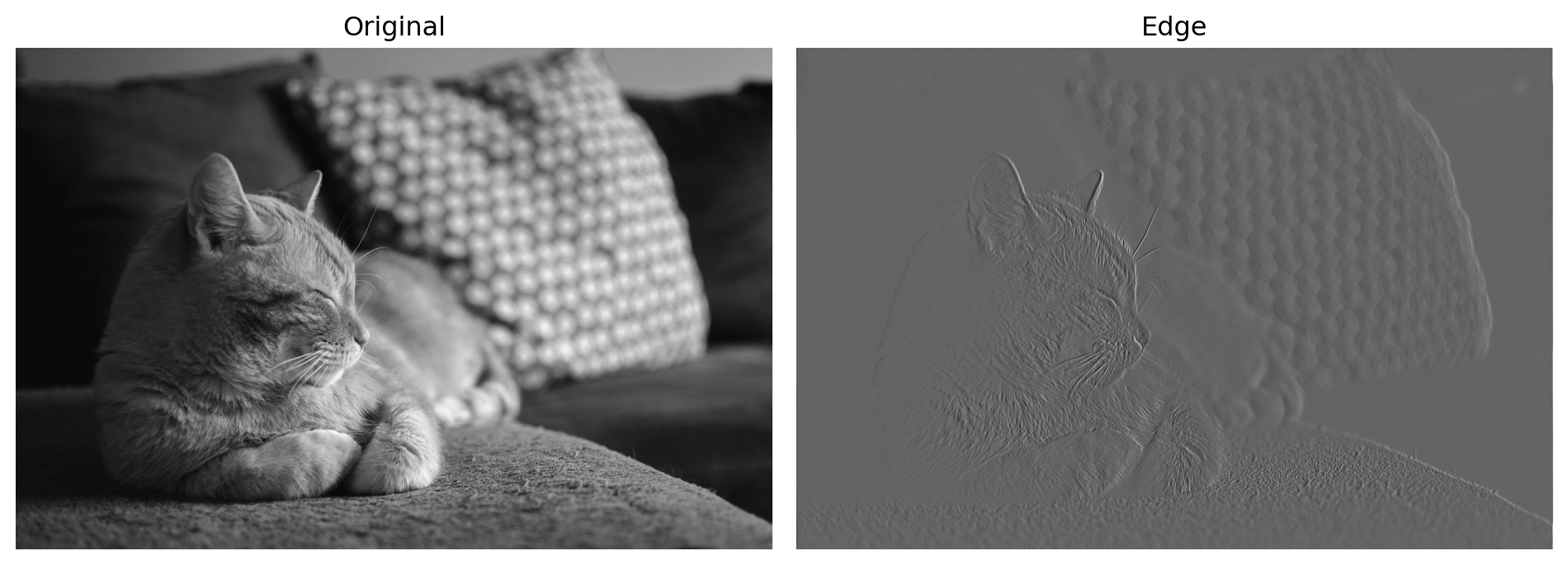

12.3.7 Introduction to convolution

A convolution is a fundamental mathematical operation that combines two functions to produce a third, effectively transforming one function based on the shape of another. In the context of image processing, it’s a powerful tool used to filter, detect features, or highlight specific patterns within an input image.

For our purposes, we can think of an image as a large 2D array of numerical values, where each value represents the brightness or color intensity of a single pixel.

The convolution operation involves sliding a smaller 2D array, called a kernel (or filter), over the entire image. At each position, the kernel interacts with the underlying image pixels to compute a new pixel value for the output image.

For example: \[ \text{Kernel} = \begin{bmatrix} 1/9 & 1/9 & 1/9 \\ 1/9 & 1/9 & 1/9 \\ 1/9 & 1/9 & 1/9 \end{bmatrix} \]

We could look at a 9x9 region around a pixel and apply this kernel using this formula:

\[ \text{Output}(i, j) = \sum_{m} \sum_{n} \text{Kernel}(m, n) \times \text{Image}(i+m, j+n) \]

Where:

\((i, j)\) = The coordinates of the current pixel being calculated in the output image.

\((m, n)\) = Offsets within the kernel, typically relative to its center. If using your

m, nas positive indices from 0, then thei+m, j+nform is common. (A small note oni+m, j+nvsi-m, j-n: The standard mathematical definition of convolution usesi-m, j-n. However, in deep learning, the operation is often implemented as a “cross-correlation” which usesi+m, j+n, but still referred to as convolution. Both forms achieve similar filtering, but the deep learning convention is usuallyi+m, j+n).\(\text{Kernel}(m, n)\) = The value of the kernel at its offset position \((m, n)\).

\(\text{Image}(i+m, j+n)\) = The pixel value from the input image located at the position \((i+m, j+n)\), which corresponds to the kernel’s current overlap.

We are essentially replacing each pixel in an image with the average of the surrounding pixels, using this kernal.

from scipy import signal

from PIL import Image

img = Image.open("./images/cat.jpg").convert('L')

arr = np.array(img, dtype=float)

blur_kernel = np.array([[1, 1, 1],

[1, 1, 1],

[1, 1, 1]])/9

blur = signal.convolve2d(arr, blur_kernel, mode='same')

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

ax1.imshow(arr, cmap='gray'); ax1.set_title('Original'); ax1.axis('off')

ax2.imshow(blur, cmap='gray'); ax2.set_title('Blur'); ax2.axis('off')

plt.tight_layout(); plt.show()

What if we used a different kernel?

\[ \text{Kernel} = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} \]

edge_kernel = np.array([[-1, 0, 1],

[-2, 0, 2],

[-1, 0, 1]])

edge = signal.convolve2d(arr, edge_kernel, mode='same')

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

ax1.imshow(arr, cmap='gray'); ax1.set_title('Original'); ax1.axis('off')

ax2.imshow(edge, cmap='gray'); ax2.set_title('Edge'); ax2.axis('off')

plt.tight_layout(); plt.show()

We can use kernel to “bring out” certain parts of an image, like a filter. In general a section of an image that is more bright is in higer accordance with the kernel (matches its pattern), and the reverse for darker spots.

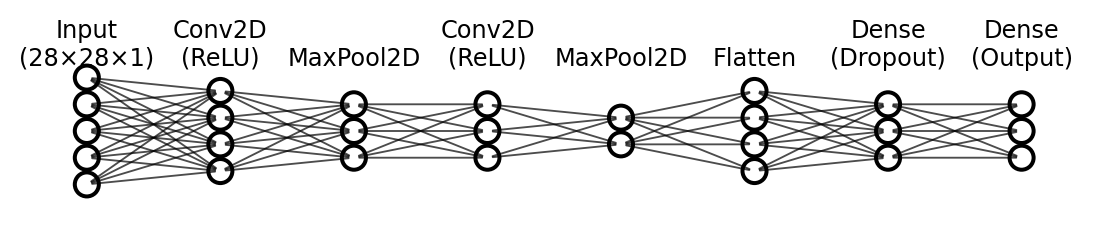

12.3.8 CNN Structure

# define CN

cnn = keras.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Flatten(),

layers.Dropout(0.5),

layers.Dense(128, activation='relu'),

layers.Dropout(0.5),

layers.Dense(10, activation='softmax')

])

cnn.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

cnn.summary()/Users/junyan/work/teaching/ids-f25/ids-f25/.ids-f25-py3.12/lib/python3.12/site-packages/keras/src/layers/convolutional/base_conv.py:113: UserWarning:

Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.

Model: "sequential_1"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Output Shape ┃ Param # ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩ │ conv2d (Conv2D) │ (None, 26, 26, 32) │ 320 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ max_pooling2d (MaxPooling2D) │ (None, 13, 13, 32) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ conv2d_1 (Conv2D) │ (None, 11, 11, 64) │ 18,496 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ max_pooling2d_1 (MaxPooling2D) │ (None, 5, 5, 64) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ flatten (Flatten) │ (None, 1600) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dropout_2 (Dropout) │ (None, 1600) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense_3 (Dense) │ (None, 128) │ 204,928 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dropout_3 (Dropout) │ (None, 128) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense_4 (Dense) │ (None, 10) │ 1,290 │ └─────────────────────────────────┴────────────────────────┴───────────────┘

Total params: 225,034 (879.04 KB)

Trainable params: 225,034 (879.04 KB)

Non-trainable params: 0 (0.00 B)

In a convolutional neural network, our weights are represented by the elements in a kernel. In the first layer we have 32 3x3 kernels. Each 3x3 kernel contains 9 weights, so in total that is 288 weights in our first layer (32 × 9 = 288).

At each position during convolution:

\(z(x, y) = \sum_{i=1}^{k} w_i \cdot a_i + b\)

This computes the pre-activation value by summing the kernel weights \(w_i\) multiplied by the corresponding input pixel values \(a_i\), plus a bias \(b\).

The full convolution operation:

\(Z = W * A + b\)

Where \(*\) denotes convolution. This applies the dot product at every spatial position across the input, producing a feature map \(Z\).

The activation in the first layer:

\(A_{\text{out}} = \text{ReLU}(Z) = \max(0, Z)\)

In the first layer, \(a_i\) are the raw pixel values (0–1) from the input image. ReLU is applied element-wise to the entire feature map.

After each kernel is applied to the input image, producing a feature map we apply max pooling. This step uses a 2x2 kernel which sets the current output pixel to whichever pixel in the corresponding 2x2 input area has the highest value.

\[ \text{Output} = \max\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} = 4 \]

Max pooling reduces the spatial dimensions by half, while preserving the features that the neural network has learned to be important.

Note For these max pooling layers there are no weights to update.

There is another convolution layer, which produces even more kernels and feature maps.

The purpose of these convolution and pooling layers is to continually extract features that define a number or image in general. Early layers learn simple features like edges, corners, and diagonal lines. After pooling reduces resolution, subsequent layers build on these to learn more complex and larger-scale features (like curves, shapes, or patterns).

This hierarchical approach allows the network to progressively abstract the image into increasingly meaningful representations.

After these convolution layers, we flatten the resulting feature maps into a 1D vector and feed it to a fully connected MLP. The MLP uses these learned features to make the final classification decision.

12.3.9 CNN Training and Results

# train / load CNN

cnn_path = "./.keras/cnn_mnist_model.keras"

if os.path.exists(cnn_path):

cnn = keras.models.load_model(cnn_path)

hist_cnn = None

else:

x_cnn = x_train.reshape(-1, 28, 28, 1)

hist_cnn = cnn.fit(x_cnn, y_train,

epochs=10, batch_size=32,

validation_split=0.1, verbose=0)

cnn.save(cnn_path)In this network, we keep the images as 28x28 greyscale images. The 1 means that there is one channel of color.

This is different from MLP, the pixel data per images is not flattened, it is preserved.

Now we train the model, the process is conceptually the same as MLP, we use the same optimizer and loss function. Remeber we the process of backpropagation will ultimately generate kernals in the propagation layers.

# evaluate & plot CNN history

x_cnn_test = x_test.reshape(-1, 28, 28, 1)

test_loss, test_acc = cnn.evaluate(x_cnn_test, y_test, verbose=0)

print(f"CNN Test accuracy: {test_acc:.4f}")

if hist_cnn:

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

ax1.plot(hist_cnn.history['accuracy'], label='train')

ax1.plot(hist_cnn.history['val_accuracy'], label='val')

ax1.set_title("CNN accuracy"); ax1.legend()

ax2.plot(hist_cnn.history['loss'], label='train')

ax2.plot(hist_cnn.history['val_loss'], label='val')

ax2.set_title("CNN loss"); ax2.legend()

plt.show()CNN Test accuracy: 0.9909Note This plot will only show if you have trained the model yourself.

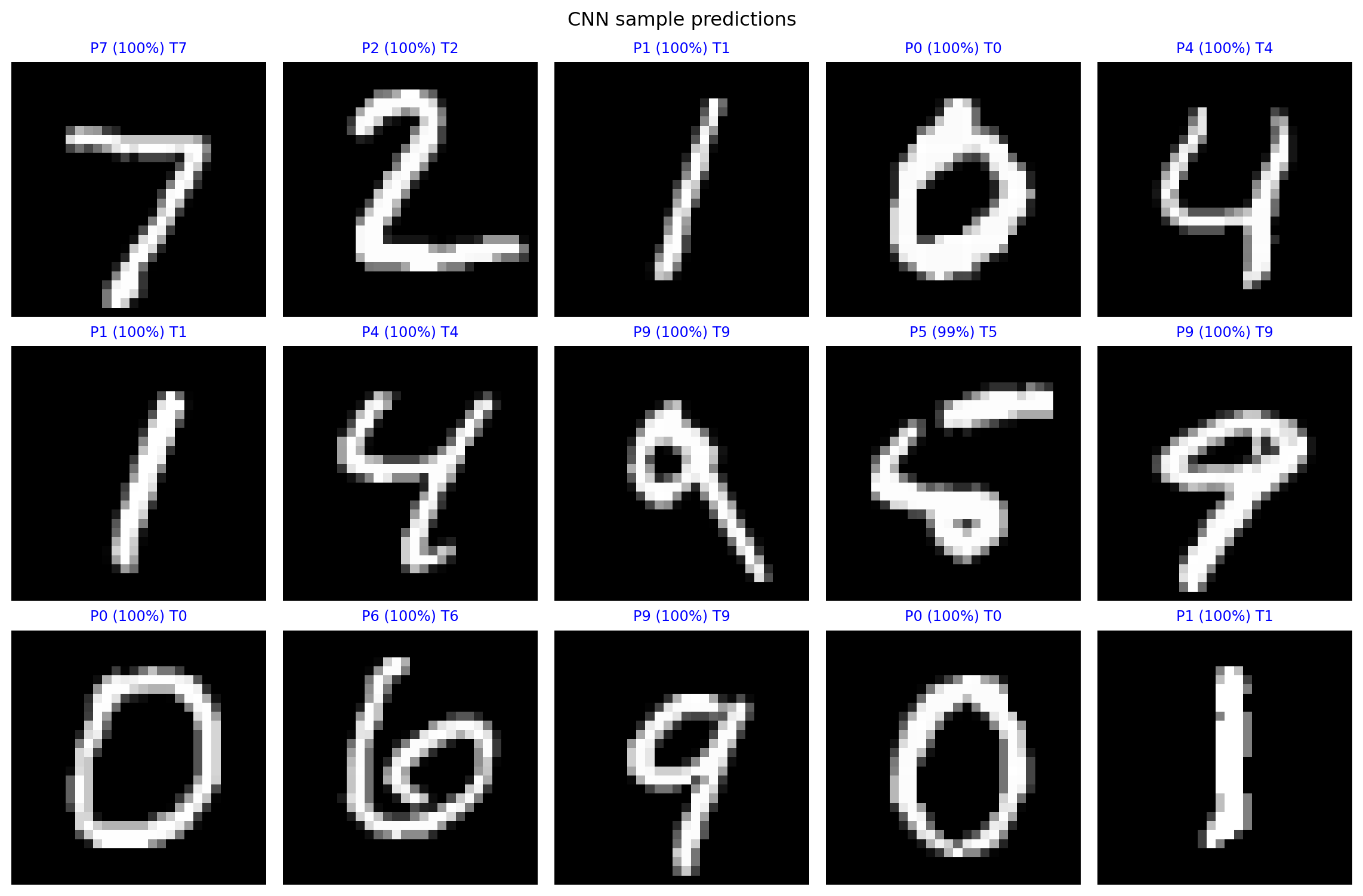

# CNN predictions grid

preds = cnn.predict(x_cnn_test, verbose=False)

fig, axes = plt.subplots(3, 5, figsize=(12, 8))

axes = axes.ravel()

for i in range(15):

ax = axes[i]

ax.imshow(x_test[i], cmap='gray')

p, t = preds[i].argmax(), y_test[i]

c = 'blue' if p == t else 'red'

conf = preds[i].max() * 100

ax.set_title(f"P{p} ({conf:.0f}%) T{t}", color=c, fontsize=9)

ax.axis('off')

plt.suptitle("CNN sample predictions"); plt.tight_layout(); plt.show()

This model is more accurate than the MLP model, it has fewer mistakes.

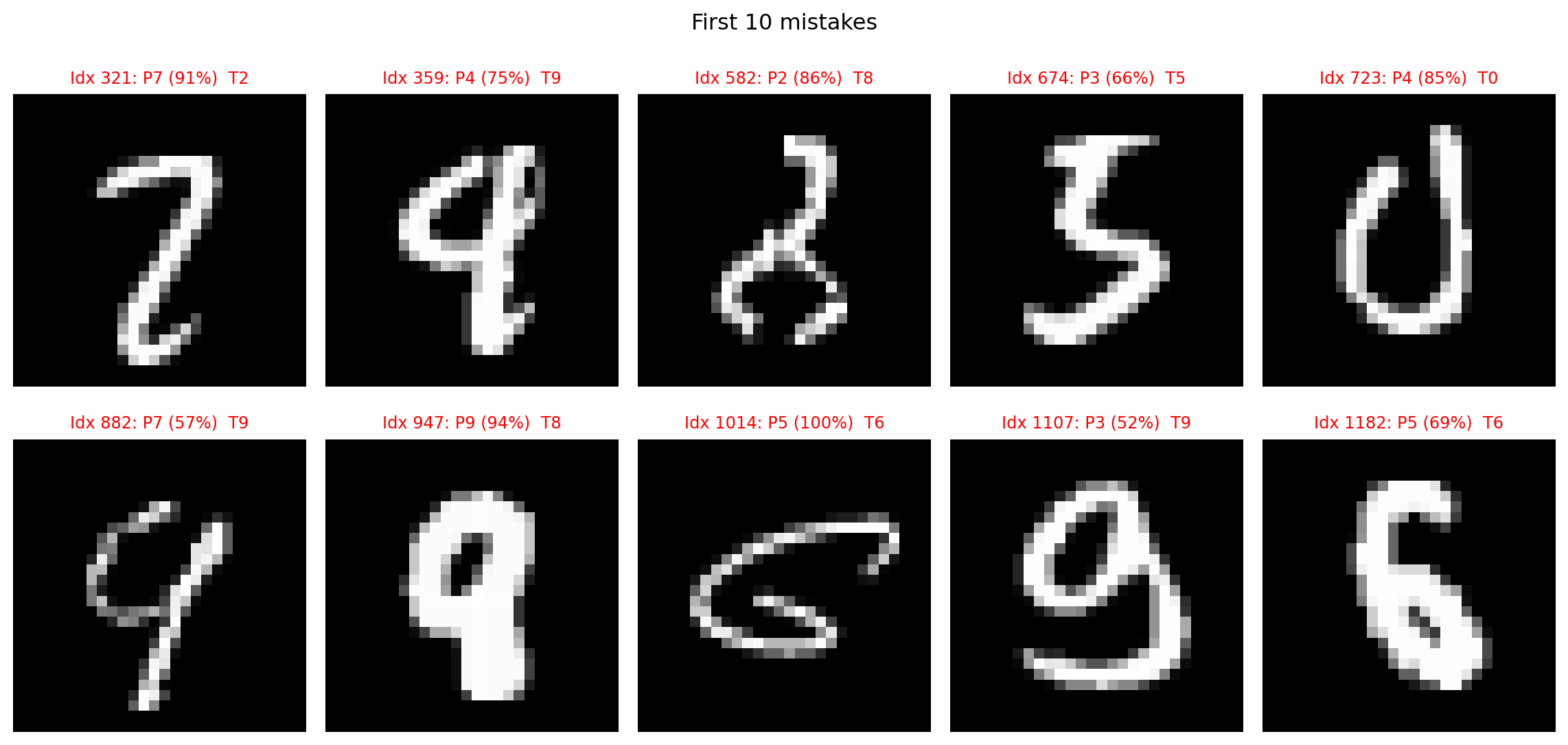

wrong_idx = np.where(np.argmax(preds, axis=1) != y_test)[0]

print(f"Total wrong: {len(wrong_idx)} / {len(y_test)}")

N = 10

fig, axes = plt.subplots(2, 5, figsize=(12, 6)); axes = axes.ravel()

for k, idx in enumerate(wrong_idx[:N]):

ax = axes[k]

ax.imshow(x_test[idx], cmap='gray')

p = preds[idx].argmax(); t = y_test[idx]

conf = preds[idx].max()*100

ax.set_title(f"Idx {idx}: P{p} ({conf:.0f}%) T{t}", color='red', fontsize=9)

ax.axis('off')

plt.suptitle("First 10 mistakes"); plt.tight_layout(); plt.show()Total wrong: 91 / 10000

The numbers that this model fails to recognize are very poorly drawn numbers that humans would have a hard time classifying. This is a good sign that our model is not overfit, or simply memorizing the dataset.

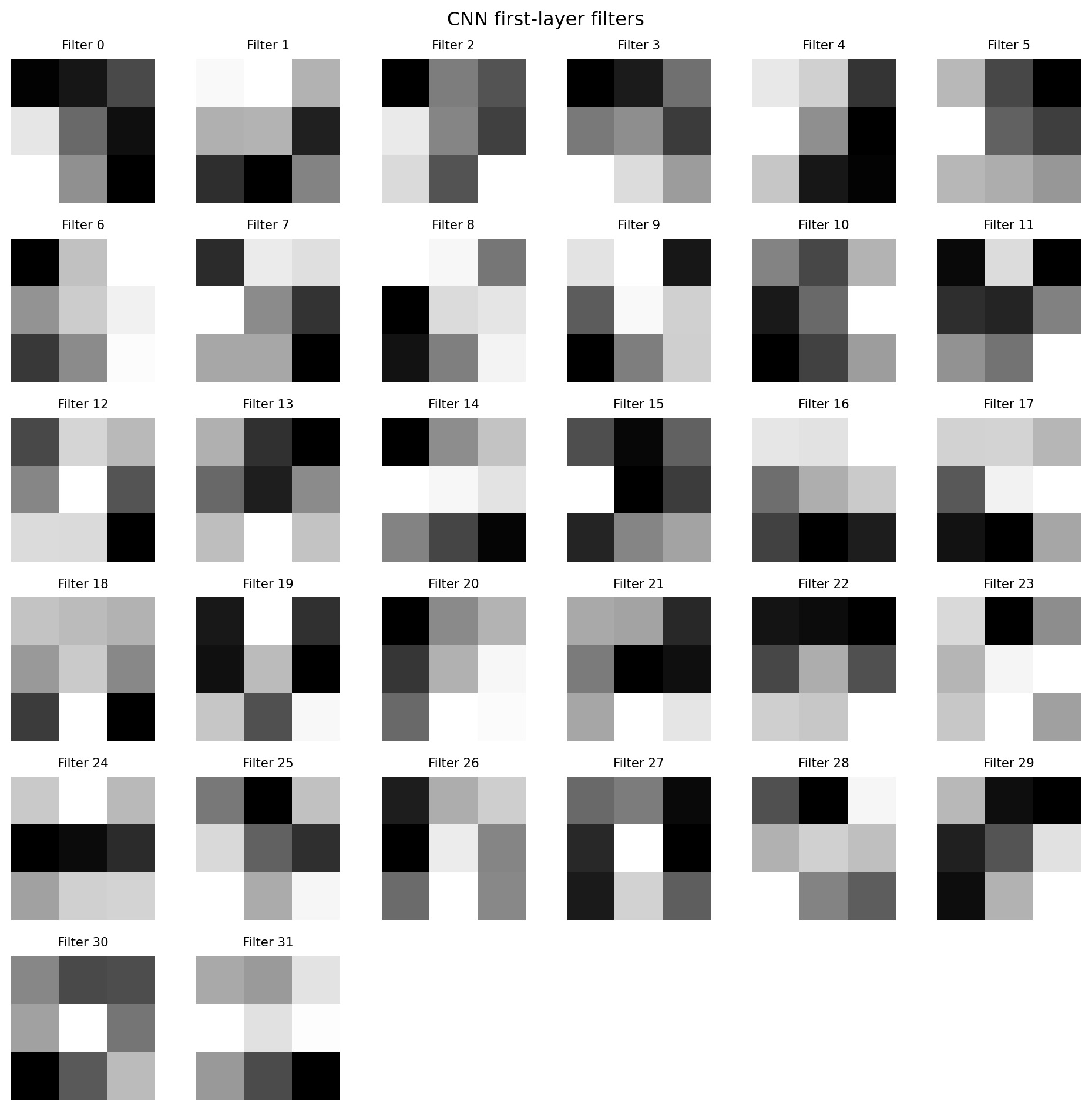

12.3.10 CNN Weights Visualized as Kernels

# CNN filters

filters, _ = cnn.layers[0].get_weights() # (3,3,1,32)

filters = (filters - filters.min()) / (filters.max() - filters.min())

plt.figure(figsize=(10, 10))

for i in range(filters.shape[-1]):

plt.subplot(6, 6, i + 1)

plt.imshow(filters[:, :, 0, i], cmap='gray')

plt.title(f'Filter {i}', fontsize=8); plt.axis('off')

plt.suptitle("CNN first-layer filters"); plt.tight_layout(); plt.show()

These feature maps seem to be optimized to recognize specific features, we cannot be entirely sure until we see how these kernels are applied to an example.

12.3.11 Feature maps

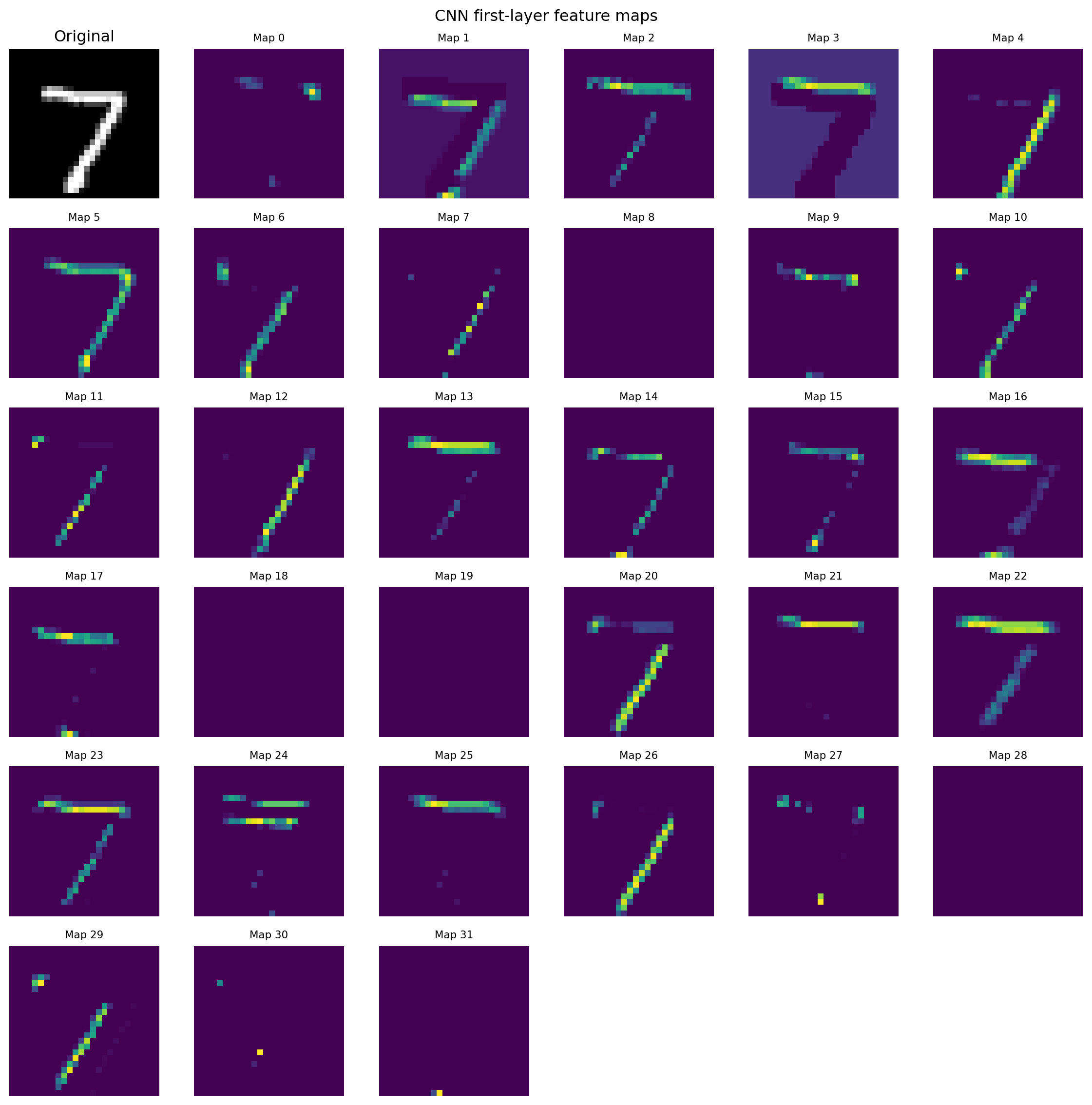

The code below essentially recreates the first convolution layer of our the with the weights that we found in training, and retrieves the outupt of this one layer throught “predicting” the given input image.

We take a single image from the set (x_cnn_test[0]), adding a batch dimension to it (np.expand_dims), feeding it into the extractor model to get its feature maps (or activations of a specific layer), and then removing the batch dimension from the output so fmaps holds just the feature maps for that one image.

# feature maps for the first test image

extractor = keras.Model(inputs=cnn.inputs, outputs=cnn.layers[0].output)

fmaps = extractor.predict(np.expand_dims(x_cnn_test[0], axis=0), verbose=False)[0]

plt.figure(figsize=(12, 12))

plt.subplot(6, 6, 1); plt.imshow(x_test[0], cmap='gray')

plt.title("Original"); plt.axis('off')

for i in range(fmaps.shape[-1]):

plt.subplot(6, 6, i + 2)

plt.imshow(fmaps[:, :, i], cmap='viridis')

plt.title(f'Map {i}', fontsize=8); plt.axis('off')

plt.suptitle("CNN first-layer feature maps"); plt.tight_layout(); plt.show()

We can see a clear correspondence between the learned kernels and the resulting feature maps: kernels that respond to edges, strokes, or curves produce bright regions where those patterns appear in the image. Some maps look blank because their kernels have been tuned to features that happen to be absent from this particular digit; consequently the neuron’s activation is low (about 0) and the map remains dark. Conversely, feature maps with many bright pixels indicate strong kernel responses, which—after pooling and downstream layers—drive higher activations in the neurons responsible for predicting this digit.

12.3.12 Conclusion

Ultimately, the CNN model achieves superior image-classification accuracy by learning spatially coherent features in a way that mirrors how we naturally perceive images—deciphering edges, textures, and shapes through its layered convolutional filters.

12.3.13 Further readings

3Blue1Brown videos on MLP:

Erai video on CNN: